Congestion management policies

Queuing is a common congestion management technique. It classifies traffic into queues and selects packets from each queue for transmission according to a scheduling algorithm. Various queuing algorithms are available, and each addresses a particular network traffic problem. The algorithm you choose significantly affects bandwidth assignment, delay, and jitter.

Congestion management involves queue creation, traffic classification, packet enqueuing, and queue scheduling. Queue scheduling treats packets of different priorities differently so that high-priority packets are transmitted first.

This section briefly describes several common queue-scheduling mechanisms.

FIFO

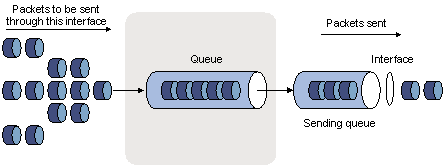

Figure 14: FIFO queuing

As shown in Figure 14, first in, first out (FIFO) queuing uses a single queue and neither classifies traffic nor schedules queues. FIFO sends packets in their arrival order: the packet that arrives first is sent first. The only parameter that matters in FIFO is queue length, which affects delay and packet loss rate. A device assigns resources to packets based on their arrival order and its own load status. The best-effort service model uses FIFO queuing.
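The FIFO behavior above can be sketched in Python as follows. This is a minimal illustration, not device code: the `FifoQueue` class and its `max_len` limit are hypothetical, and dropping new arrivals once the queue is full (tail drop) is an assumption about what happens when the queue length limit is reached.

```python
from collections import deque
from typing import Deque, Optional


class FifoQueue:
    """Single FIFO queue; tail drop once max_len packets are buffered (assumed)."""

    def __init__(self, max_len: int) -> None:
        self.max_len = max_len              # hypothetical queue-length limit
        self.buf: Deque[str] = deque()
        self.dropped = 0

    def enqueue(self, pkt: str) -> bool:
        # No classification: every packet goes into the same queue.
        if len(self.buf) >= self.max_len:
            self.dropped += 1               # tail drop: the arriving packet is lost
            return False
        self.buf.append(pkt)
        return True

    def dequeue(self) -> Optional[str]:
        # The packet that arrived first is sent first.
        return self.buf.popleft() if self.buf else None


q = FifoQueue(max_len=3)
for p in ["voice", "ftp1", "ftp2", "ftp3"]:
    q.enqueue(p)
print([q.dequeue() for _ in range(3)], q.dropped)  # ['voice', 'ftp1', 'ftp2'] 1
```

Note that the voice packet gets no special treatment: it is sent first only because it happened to arrive first, and a longer queue would simply mean longer waits for everything behind it.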

FIFO does not address congestion. If a port has only one FIFO output or input queue, you can hardly ensure timely delivery of mission-critical or delay-sensitive traffic, or smooth traffic jitter. The situation worsens if malicious traffic aggressively occupies bandwidth. To control congestion and prioritize forwarding of critical traffic, use other queue scheduling mechanisms, which support multiple queues. Within each queue, however, FIFO is still used.

By default, FIFO queuing is used on interfaces.

Priority queuing

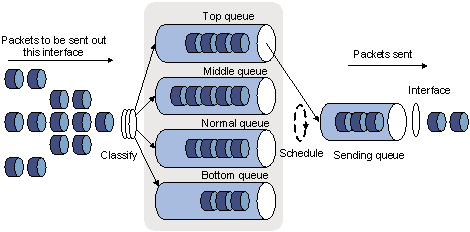

Figure 15: Priority queuing (PQ)

Priority queuing is designed for mission-critical applications. The key feature of mission-critical applications is that they require preferential service to reduce response delay during congestion. Priority queuing can flexibly determine the order of forwarding packets by network protocol (for example, IP and IPX), incoming interface, packet length, source or destination address, and so on. Priority queuing classifies packets into four queues: top, middle, normal, and bottom, in descending priority order. By default, packets are assigned to the normal queue. Each of the four queues is a FIFO queue.

Priority queuing schedules the four queues in descending priority order. It sends packets from the highest-priority queue first. Only when that queue is empty does it send packets from the queue with the next highest priority. In this way, you can assign mission-critical packets to a high-priority queue to make sure that they are always served first, and assign common service packets to low-priority queues, which are served only when the higher-priority queues are empty.
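A minimal Python sketch of the strict-priority scheduling just described, assuming packets have already been classified into one of the four named queues. `PriorityQueuing` and its methods are hypothetical names used only for illustration.

```python
from collections import deque
from typing import Deque, Dict, Optional

# Queue names in descending priority order.
PRIORITIES = ["top", "middle", "normal", "bottom"]


class PriorityQueuing:
    def __init__(self) -> None:
        # Each of the four queues is itself a FIFO queue.
        self.queues: Dict[str, Deque[str]] = {n: deque() for n in PRIORITIES}

    def enqueue(self, pkt: str, queue: str = "normal") -> None:
        # Packets not assigned elsewhere go to the normal queue.
        self.queues[queue].append(pkt)

    def dequeue(self) -> Optional[str]:
        # Always serve the highest-priority non-empty queue.
        for name in PRIORITIES:
            if self.queues[name]:
                return self.queues[name].popleft()
        return None


pq = PriorityQueuing()
pq.enqueue("web")              # normal (default)
pq.enqueue("bulk", "bottom")
pq.enqueue("voice", "top")
print([pq.dequeue() for _ in range(3)])  # ['voice', 'web', 'bulk']
```

Because `dequeue` restarts its scan at `top` every time, a high-priority queue that keeps receiving packets prevents the lower queues from ever being served in this sketch.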

The disadvantage of priority queuing is starvation: during congestion, if the higher-priority queues stay non-empty for a long time, packets in the lower-priority queues cannot be transmitted.

Custom queuing

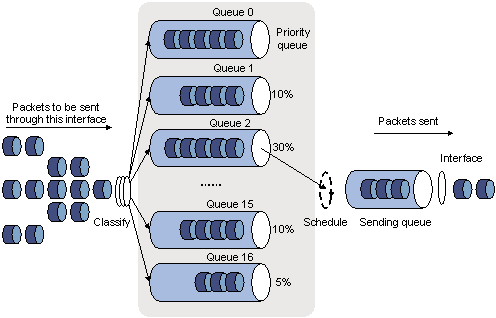

Figure 16: Custom queuing (CQ)

CQ provides 17 queues, numbered 0 to 16. Queue 0 is a reserved system queue, and queues 1 through 16 are customer queues, as shown in Figure 16. You can define traffic classification rules and assign each customer queue a percentage of the interface or PVC bandwidth. By default, packets are assigned to queue 1.

During each scheduling cycle, CQ first empties the system queue. Then it schedules the 16 customer queues in round-robin fashion, sending a certain number of packets (based on the percentage of interface bandwidth assigned to each queue) from each queue in ascending order from queue 1 to queue 16. CQ thus guarantees normal packets a certain amount of bandwidth while ensuring that mission-critical packets receive more bandwidth.
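The scheduling cycle above can be sketched as follows. The text does not say how a bandwidth percentage becomes a packet count, so this sketch assumes the percentages have already been converted into per-cycle packet quotas. `CustomQueuing`, `quotas`, and `run_cycle` are hypothetical names.

```python
from collections import deque
from typing import Deque, Dict, List


class CustomQueuing:
    """One CQ scheduling cycle per call to run_cycle().

    quotas maps a customer queue number (1-16) to the packets it may send
    per cycle; deriving these from bandwidth percentages is assumed.
    """

    def __init__(self, quotas: Dict[int, int]) -> None:
        self.quotas = quotas
        # Queue 0 is the system queue; queues 1-16 are customer queues.
        self.queues: Dict[int, Deque[str]] = {n: deque() for n in range(17)}

    def enqueue(self, pkt: str, queue: int = 1) -> None:
        # Unclassified packets default to queue 1.
        self.queues[queue].append(pkt)

    def run_cycle(self) -> List[str]:
        sent: List[str] = []
        # 1. Empty the system queue first.
        while self.queues[0]:
            sent.append(self.queues[0].popleft())
        # 2. Round robin over customer queues 1..16 in ascending order.
        for n in range(1, 17):
            q = self.queues[n]
            # Send up to this queue's quota; an empty queue is skipped at once.
            for _ in range(self.quotas.get(n, 0)):
                if not q:
                    break
                sent.append(q.popleft())
        return sent


cq = CustomQueuing(quotas={1: 1, 2: 3})  # queue 2 gets 3x the share of queue 1
for i in range(4):
    cq.enqueue(f"a{i}", 1)
    cq.enqueue(f"b{i}", 2)
cq.enqueue("sys", 0)
print(cq.run_cycle())  # ['sys', 'a0', 'b0', 'b1', 'b2']
```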

CQ can assign the free bandwidth of idle queues to busy queues. Although it performs round-robin scheduling, CQ does not assign fixed time slots to the queues. If a queue is empty, CQ immediately moves to the next queue, so when a queue has no packets, the bandwidth available to the other queues increases.
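The effect of skipping empty queues can be worked out numerically. Assuming equal packet sizes, busy queues that are always backlogged, and per-cycle quotas proportional to the configured percentages, each busy queue ends up with its percentage divided by the sum of the busy queues' percentages. The `effective_shares` helper below is a hypothetical illustration of that arithmetic, not a device algorithm.

```python
from fractions import Fraction
from typing import Dict, Set


def effective_shares(percent: Dict[int, int], busy: Set[int]) -> Dict[int, Fraction]:
    """Link share of each busy CQ queue while the other queues are idle.

    Assumes equal packet sizes, continuously backlogged busy queues, and
    per-cycle quotas proportional to the configured percentages.
    """
    active_total = sum(percent[n] for n in busy)
    # Idle queues consume no time in the cycle, so their configured share
    # is split among busy queues in proportion to the busy queues' percentages.
    return {n: Fraction(percent[n], active_total) for n in sorted(busy)}


print(effective_shares({1: 20, 2: 30, 3: 50}, busy={1, 2}))
# {1: Fraction(2, 5), 2: Fraction(3, 5)}
```

With queue 3 idle, queues 1 and 2 grow from 20% and 30% to 40% and 60% of the link.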

Weighted fair queuing

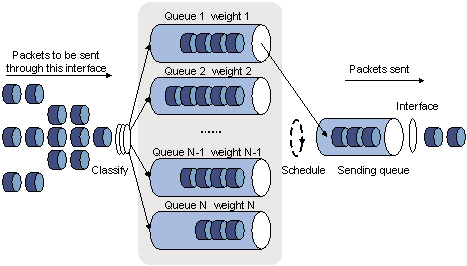

Figure 17: Weighted fair queuing (WFQ)

To understand WFQ, first consider fair queuing (FQ). FQ is designed to allocate network resources fairly and to keep the delay and jitter of each traffic flow as low as possible. To balance the interests of all flows, FQ follows these principles:

Different queues have fair scheduling opportunities, which balances delay among flows.

Short packets and long packets are scheduled fairly: if both long and short packets are queued, the short packets are statistically scheduled first to reduce the overall jitter between packets.

Compared with FQ, WFQ also takes weights into account when determining the scheduling order. Statistically, WFQ gives high-priority traffic more scheduling opportunities than low-priority traffic. WFQ can automatically classify traffic by its "session" information (protocol type, TCP or UDP source and destination port numbers, source and destination IP addresses, IP precedence bits in the ToS field, and so on) and tries to provide as many queues as possible, so that each flow can be placed in its own queue and the delay of all flows is balanced overall. When dequeuing packets, WFQ assigns the outgoing interface bandwidth to each flow according to its precedence: the higher a flow's precedence value, the more bandwidth it gets.
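The sketch below shows one way weighted scheduling can favor both short packets and high-precedence flows. It implements self-clocked fair queuing (SCFQ), a well-known approximation of WFQ, rather than the exact algorithm a device uses. The weight of precedence + 1 follows the example in this section, flows are identified by a caller-supplied label instead of automatic session classification, and `WfqSketch` is a hypothetical name. With all weights equal, it behaves like FQ.

```python
import heapq
from itertools import count
from typing import Dict, List, Optional, Tuple


class WfqSketch:
    """Self-clocked fair queuing, an approximation of WFQ.

    Each packet gets a virtual finish tag
        F = max(V, previous F of its flow) + size / weight,
    where V is the tag of the packet most recently sent and
    weight = IP precedence + 1. The smallest tag is sent first.
    """

    def __init__(self) -> None:
        self.vtime = 0.0
        self.last_finish: Dict[str, float] = {}
        self.heap: List[Tuple[float, int, str, str]] = []
        self.seq = count()  # tie-breaker: equal tags keep arrival order

    def enqueue(self, flow: str, precedence: int, size: int, pkt: str) -> None:
        weight = precedence + 1
        start = max(self.vtime, self.last_finish.get(flow, 0.0))
        finish = start + size / weight   # short or high-weight packets finish earlier
        self.last_finish[flow] = finish
        heapq.heappush(self.heap, (finish, next(self.seq), flow, pkt))

    def dequeue(self) -> Optional[str]:
        if not self.heap:
            return None
        finish, _, _, pkt = heapq.heappop(self.heap)
        self.vtime = finish              # self-clocking: V = tag of packet in service
        return pkt


wfq = WfqSketch()
wfq.enqueue("ftp", precedence=0, size=1500, pkt="ftp-1500B")
wfq.enqueue("telnet", precedence=0, size=64, pkt="telnet-64B")
print(wfq.dequeue())  # telnet-64B
```

The 64-byte packet gets the smaller finish tag (64 versus 1500) and is sent first even though it arrived second. Raising a flow's precedence divides its tags by a larger weight, which gives it proportionally more turns.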

For example, assume that five flows exist on the interface, with precedence 0, 1, 2, 3, and 4, respectively. The total bandwidth quota is the sum of (precedence value + 1) over all flows: 1 + 2 + 3 + 4 + 5 = 15.

The bandwidth percentage assigned to each flow is (precedence value of the flow + 1)/total bandwidth quota. The bandwidth percentages for flows are 1/15, 2/15, 3/15, 4/15, and 5/15, respectively.
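The arithmetic in this example can be checked with a few lines of Python. `wfq_shares` is a hypothetical helper that applies the (precedence value + 1) / total bandwidth quota formula to a list of precedence values.

```python
from fractions import Fraction
from typing import List


def wfq_shares(precedences: List[int]) -> List[Fraction]:
    # Each flow's weight is its precedence + 1; its share is weight / sum of weights.
    weights = [p + 1 for p in precedences]
    total = sum(weights)
    return [Fraction(w, total) for w in weights]


print([str(s) for s in wfq_shares([0, 1, 2, 3, 4])])
# ['1/15', '2/15', '1/5', '4/15', '1/3']
```

`Fraction` reduces 3/15 and 5/15 to 1/5 and 1/3, which are the same values as in the text.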

Because WFQ balances the delay and jitter of each flow during congestion, it suits certain special scenarios. For example, WFQ is used in the assured forwarding (AF) services of RSVP, and in GTS, WFQ schedules buffered packets.

CBQ

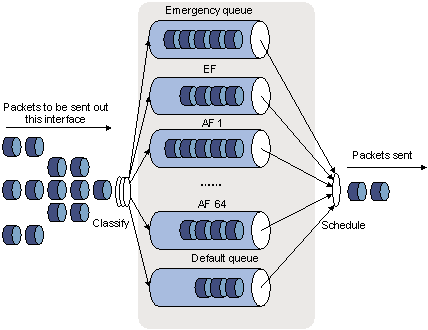

Figure 18: CBQ

Class-based queuing (CBQ) extends WFQ by supporting user-defined classes. When network congestion occurs, CBQ enqueues packets according to user-defined traffic match criteria. Before packets are enqueued, congestion avoidance actions (such as tail drop or WRED) and a bandwidth restriction check are performed. When packets are dequeued, they are scheduled by WFQ.

CBQ provides the following queues:

Emergency queue—Holds emergency packets. The emergency queue is a FIFO queue without bandwidth restriction.

Low Latency Queuing (LLQ)—An EF queue. Because CBQ treats packets fairly, delay-sensitive flows such as voice and video might not be transmitted in time. To solve this problem, the EF queue was introduced to transmit delay-sensitive flows preferentially. LLQ combines PQ and CBQ to preferentially transmit delay-sensitive flows such as voice packets. When defining traffic classes for LLQ, you can configure a class of packets to be transmitted preferentially. Such a class is called a "priority class." Packets of all priority classes are assigned to the same priority queue. The bandwidth restriction of each class is checked before its packets are enqueued. During dequeuing, packets in the priority queue are transmitted first. To limit the delay of the queues other than the priority queue, LLQ assigns each priority class a maximum available bandwidth. This bandwidth value polices traffic during congestion: when no congestion is present, a priority class can use more than its assigned bandwidth, but during congestion, packets of each priority class that exceed the assigned bandwidth are discarded.

Bandwidth queuing (BQ)—An AF queue. BQ provides exact, guaranteed bandwidth for AF traffic and schedules the AF classes proportionally. The system supports up to 64 AF queues.

Default queue—A WFQ queue. It transmits the BE traffic by using the remaining interface bandwidth.
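The LLQ rule that a priority class may exceed its assigned bandwidth only when there is no congestion can be sketched with a token bucket. The token bucket is an assumed policing mechanism, not one named in this section, and `PriorityClassPolicer`, `burst_bytes`, and the explicit `congested` flag are hypothetical.

```python
class PriorityClassPolicer:
    """Hypothetical token-bucket policer for one LLQ priority class."""

    def __init__(self, rate_bps: int, burst_bytes: int) -> None:
        self.fill_rate = rate_bps / 8      # assigned bandwidth, in bytes per second
        self.burst = burst_bytes           # assumed bucket depth, in bytes
        self.tokens = float(burst_bytes)   # bucket starts full
        self.last = 0.0                    # time of the previous update, in seconds

    def admit(self, size: int, now: float, congested: bool) -> bool:
        # Refill tokens for the elapsed time, capped at the bucket depth.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.fill_rate)
        self.last = now
        if self.tokens >= size:
            self.tokens -= size            # within the assigned bandwidth
            return True
        # Beyond the assigned bandwidth: allowed without congestion,
        # discarded during congestion.
        return not congested


voice = PriorityClassPolicer(rate_bps=64_000, burst_bytes=1500)
print(voice.admit(1000, now=0.0, congested=True))   # True: within the bucket
print(voice.admit(1000, now=0.0, congested=True))   # False: excess dropped under congestion
print(voice.admit(1000, now=0.0, congested=False))  # True: excess allowed without congestion
```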

The system matches packets with classification rules in the following order:

Match packets with priority classes and then the other classes.

Match packets with priority classes in the configuration order.

Match packets with other classes in the configuration order.

Match packets with classification rules in a class in the configuration order.
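The matching order above can be sketched as follows. The DSCP-based rules, the `TrafficClass` and `classify` names, and treating a class as matched when any one of its rules matches are all assumptions for illustration. In this sketch, returning `None` stands for a packet falling through to the default queue.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

Packet = Dict[str, int]               # hypothetical packet fields, e.g. {"dscp": 46}
Rule = Callable[[Packet], bool]


@dataclass
class TrafficClass:
    name: str
    priority: bool                    # True for an LLQ priority class
    rules: List[Rule] = field(default_factory=list)  # in configuration order


def classify(pkt: Packet, classes: List[TrafficClass]) -> Optional[str]:
    """Return the first matching class name; None means the default queue.

    `classes` must be listed in configuration order.
    """
    # Priority classes come before all other classes. sorted() is stable,
    # so configuration order is preserved inside each group.
    ordered = sorted(classes, key=lambda c: not c.priority)
    for cls in ordered:
        # any() checks the class's rules in configuration order and stops
        # at the first match.
        if any(rule(pkt) for rule in cls.rules):
            return cls.name
    return None


classes = [
    TrafficClass("any-marked", False, [lambda p: p["dscp"] != 0]),
    TrafficClass("voice", True, [lambda p: p["dscp"] == 46]),
]
print(classify({"dscp": 46}, classes))  # voice (priority class is checked first)
print(classify({"dscp": 10}, classes))  # any-marked
print(classify({"dscp": 0}, classes))   # None
```

Although `any-marked` is configured first and also matches DSCP 46, the voice packet lands in the priority class because priority classes are always checked before the others.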

RTP priority queuing

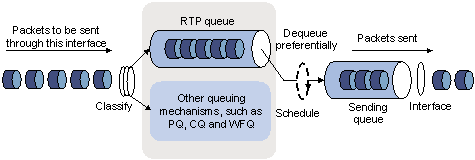

Real-time Transport Protocol (RTP) priority queuing is a simple queuing technique designed to guarantee QoS for real-time services, including voice and video. It assigns RTP voice and video packets to a high-priority queue for preferential sending, which minimizes delay and jitter for these delay-sensitive services.

Figure 19: RTP queuing

As shown in Figure 19, RTP priority queuing assigns RTP packets to a high-priority queue. An RTP packet is identified as a UDP packet whose destination port number is even and falls within a configurable range. RTP priority queuing can be used together with any queuing mechanism (such as FIFO, PQ, CQ, WFQ, and CBQ), and the RTP queue always has the highest priority.
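The RTP match rule above reduces to a short predicate. `is_rtp` is a hypothetical helper, and the 16384 to 32767 range in the usage lines is only an example value for the configurable range.

```python
def is_rtp(protocol: str, dst_port: int, port_min: int, port_max: int) -> bool:
    """True if the packet matches the RTP rule: UDP, even port, inside the range.

    port_min and port_max stand for the configurable port range.
    """
    return (
        protocol == "udp"
        and port_min <= dst_port <= port_max
        and dst_port % 2 == 0
    )


print(is_rtp("udp", 16384, 16384, 32767))  # True
print(is_rtp("udp", 16385, 16384, 32767))  # False: odd destination port
print(is_rtp("tcp", 16384, 16384, 32767))  # False: not UDP
```

Packets for which the predicate is true would go to the RTP high-priority queue; everything else is handed to whichever queuing mechanism is configured.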

Do not use RTP priority queuing together with CBQ, because LLQ in CBQ can also guarantee real-time service data transmission.